Your server is configured for Austria, which is why only the Austria line shows three values. The other lines are countries that your server is not assigned to, so a netspeed value makes no sense for them, and the ratio between the DNS query fraction and the netspeed fraction does not exist.

The first permyriad value for Austria is the fraction of DNS queries from clients that the pool thinks are in Austria that are answered with your server’s address, relative to all queries the pool sees from Austria (see my previous post above).

And the second permyriad value, which exists for Austria only, is the netspeed you have set for your server as a fraction of the sum of the netspeeds of all servers configured for that same country.

The last number is the relation of the two permyriad values, i.e., the factor 0.86 in your case. This value compares the share that you should get based on the netspeed setting of your server in relation to the sum of all netspeed settings, vs. the share of actual DNS queries (as rough indicator of actual NTP traffic) you get from the country (or more precisely, queries to which the pool’s response contains your server’s IP address).
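As a rough sketch of that arithmetic: the two permyriad inputs below are made-up numbers chosen so that the result matches the 0.86 mentioned above, and the function names are mine, not anything from the pool’s code. I am also assuming the ratio is DNS share divided by netspeed share, which is what the description above implies.

```python
def permyriad(part: float, whole: float) -> float:
    """Express part/whole in permyriad (parts per 10,000)."""
    return 10_000 * part / whole


def share_ratio(dns_permyriad: float, netspeed_permyriad: float) -> float:
    """Ratio of the actual DNS share to the share the netspeed setting suggests.

    Assumed direction: DNS share / netspeed share, so a value above 1 means
    more queries than the netspeed setting alone would suggest.
    """
    return dns_permyriad / netspeed_permyriad


# Hypothetical values for illustration only (not taken from the screenshot).
dns_share = 43.0       # permyriad of the country's queries answered with this server
netspeed_share = 50.0  # permyriad of the country's total configured netspeed

ratio = share_ratio(dns_share, netspeed_share)
print(f"ratio = {ratio:.2f}")
```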

In “healthy” zones, in my experience, this ratio tends to be slightly below 1. Large deviations from 1 indicate some “issue” (not necessarily problematic) with the zone.

E.g., when there are just two servers in a zone, one with 3Gbit, the other with 512Kbit, the latter will typically get a DNS share much larger than its netspeed share (i.e., it gets much more traffic than its share of the overall sum of netspeed values would suggest), because the load sharing simply does not work with only two servers. Thus, the ratio of the two permyriad values will be well above 1.
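To put a number on the netspeed side of that two-server example, here is a small sketch. It assumes decimal units (3Gbit = 3,000,000 Kbit); the server names and the DNS share at the end are invented for illustration, not measured.

```python
# Netspeed settings in Kbit/s for the two-server zone from the example.
# Assumption: decimal units, i.e. 3 Gbit = 3,000,000 Kbit.
netspeeds_kbit = {"big": 3_000_000, "small": 512}

total = sum(netspeeds_kbit.values())

# Netspeed share of each server in permyriad (parts per 10,000).
netspeed_shares = {
    name: 10_000 * speed / total for name, speed in netspeeds_kbit.items()
}

# Hypothetical DNS share of the small server: 1% of the zone's queries.
small_dns_share = 100.0  # permyriad, made up for illustration

small_ratio = small_dns_share / netspeed_shares["small"]

print(f"small server netspeed share: {netspeed_shares['small']:.2f} permyriad")
print(f"ratio at a 1% DNS share: {small_ratio:.1f}")
```

The small server’s netspeed share comes out below 2 permyriad, so any DNS share above that already pushes the ratio past 1.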

Or it could simply be that the two values are taken at different timescales: the netspeed fraction is near instantaneous (perhaps with some caching delay in the system), i.e., a value at one instant in time. The DNS fraction, on the other hand, is an average accumulated over several days. I.e., after a netspeed change, it takes some time until the new netspeed share is also reflected in the DNS share. Likewise, when some other “big” server vanishes or is added, the other servers’ netspeed shares change “immediately”, but their DNS shares take time to adjust. (@avij references the explanation for the different timescales in a parallel thread.)
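A toy model of that lag, under heavy assumptions: I am pretending the DNS fraction is a plain trailing average over 7 days and that each day’s queries follow the new netspeed share immediately. The real window length and averaging method are not something I know; all numbers are made up.

```python
from collections import deque

WINDOW_DAYS = 7  # hypothetical averaging window, the real one may differ

# Hypothetical shares in permyriad: netspeed share doubles at day 0.
old_share, new_share = 50.0, 100.0

# Before the change, every day in the window saw the old share.
window = deque([old_share] * WINDOW_DAYS, maxlen=WINDOW_DAYS)

ratios = []
for day in range(1, WINDOW_DAYS + 1):
    # Idealized: from day 1 on, each day's queries follow the new share.
    window.append(new_share)
    dns_share = sum(window) / len(window)  # trailing average over the window
    ratios.append(dns_share / new_share)   # netspeed share is already "new"

for day, ratio in enumerate(ratios, start=1):
    print(f"day {day}: ratio = {ratio:.2f}")
```

In this model the ratio drops well below 1 right after the netspeed increase and only returns to 1 once the whole window has been replaced.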

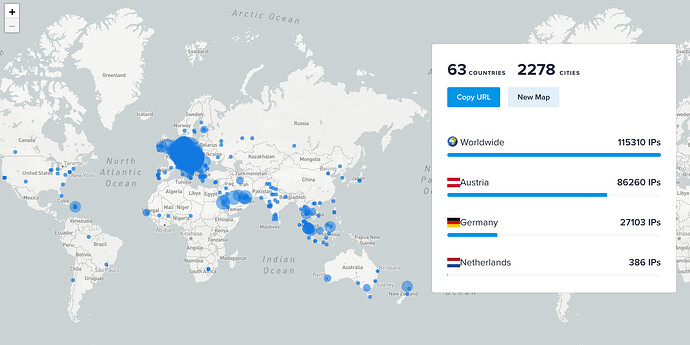

I think the value of slightly below 1 for “healthy” zones is because some of the queries from that zone go to other zones. I.e., the sum of queries from a zone going to that zone is slightly smaller than the sum of all queries from that zone. That is also why you see DNS permyriad values for other countries: those queries are “leaking” from the respective other zones to the Austria zone, i.e., clients from Germany and the USA (and probably other countries, but in numbers too small to appear in the list) get responses with your server’s IP address in them. The other way round, when you query the pool from your IP address in Austria and get the IP address of a server in Switzerland (e.g., because you query for the named country or continent zone rather than the “global” zone), that would contribute to that Swiss server’s permyriad value for queries from Austria.

By the way, at the risk of adding some confusion: the lines are sorted by the absolute number of DNS queries from the respective countries that are answered with your server’s IP address. So the DNS permyriad value of the second line can be larger than that of the first line, as long as the absolute number of queries answered with your server’s IP address is larger for the country in the first line than for the country in the second line.

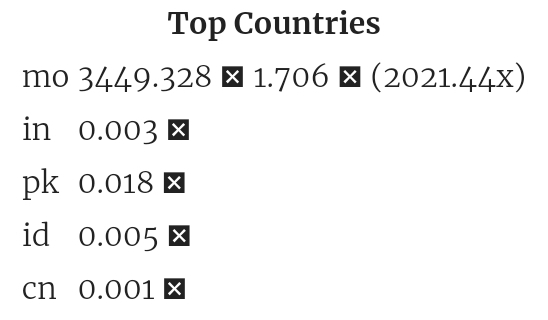

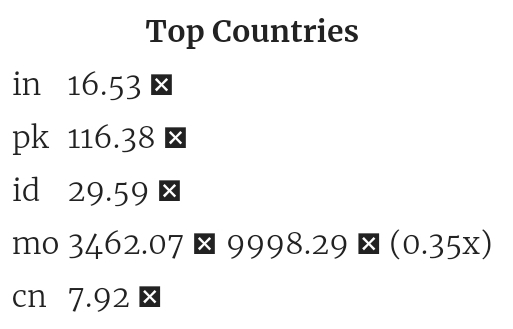

E.g., the following:

This server gets more queries in absolute numbers from clients in the US zone than from clients in the ID zone, which is why the US line is above the ID line. But relative to all queries from each respective country, its share of the US queries (0.0265%) is smaller than its share of the ID queries (0.3087%).
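The difference between the two orderings can be sketched like this. The percentages are the ones quoted above; the absolute query counts are invented, since only their order (US above ID) is known from the list.

```python
# Rows as they might appear in the list. "queries" is a made-up absolute
# count; only the dns_percent values come from the example above.
rows = [
    {"country": "id", "queries": 40_000, "dns_percent": 0.3087},
    {"country": "us", "queries": 90_000, "dns_percent": 0.0265},
]

# Order used by the list: absolute number of queries answered with this server.
by_count = sorted(rows, key=lambda r: r["queries"], reverse=True)

# Order you would get if it were sorted by share of each country's queries.
by_share = sorted(rows, key=lambda r: r["dns_percent"], reverse=True)

print("by absolute count:", [r["country"] for r in by_count])
print("by share:         ", [r["country"] for r in by_share])
```

The two sort keys give opposite orders here, because the share is taken relative to each country’s own query total, and those totals presumably differ a lot between the two countries.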